During a recent conference, our technical director, Panda, was invited to discuss the future of Web 3D engine technology and how to build a Web 3D engine. He gave a talk titled "Creating next-generation Web 3D content based on WebGPU", and he's happy to share it here with our international developers.

The amazing Web 3D examples using the Cocos engine

Today's topic is "Cocos WebGPU-based Next Generation Web 3D Content". Cocos wants to use technology to drive efficiency in the digital content industry, not only in games but also in the metaverse, automotive, virtual characters, XR, and other industries.

Cocos Web 3D Demo: Lake

A deferred rendering pipeline is relatively rare on the Web because of its complexity. This demo includes reflections, SSAO, bloom, FSR, TAA, and other advanced effects.

Cocos Web 3D Metaverse: The Beauty of True Space

This metaverse application, built by Midea on the Cocos engine, offers a lot of playable space and very good rendering. It is currently a benchmark metaverse application on the market, and Web distribution gives it a clear advantage in reach.

Cocos Web 3D Metaverse: WAIC Metaverse Without Borders

Cocos created an online venue for the World Artificial Intelligence Conference with native and web versions, and also released a VR version that you can experience on PICO and Oculus devices.

Cocos Web 3D Game: Code Name Sword Immortal

Built with Cocos Creator, it has beautiful, fully 3D graphics. It is cross-platform, with data shared across all the platforms it runs on.

The Future of Web 3D

Web 3D is suitable for many application scenarios and is a very pragmatic option because of its low cost and ability to reach all users. No matter what operating system, platform, or environment they are on, they can access your created content. So we also think that in the metaverse era, Web-based 3D will become a mainstream access platform and content-building platform.

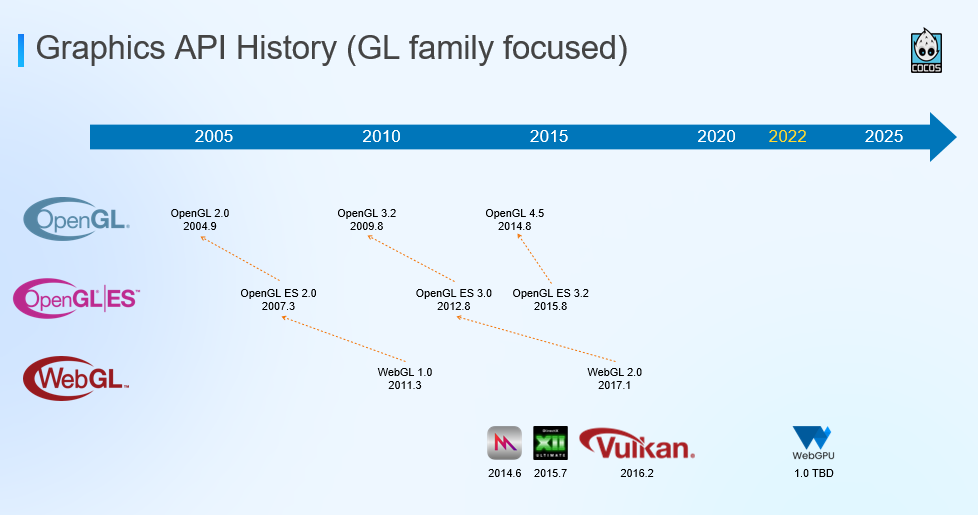

Looking at the history, the future of Web 3D is WebGPU. The lineage runs from OpenGL to OpenGL ES and then to WebGL; these serve different platforms, the Web included, but belong to the same family. Today that family is gradually being replaced by the next generation of APIs.

Vulkan has replaced OpenGL ES, and WebGPU, the successor to WebGL, is being developed as a layer over all the next-generation graphics backends: Metal, Vulkan, and DirectX. WebGPU is designed with cross-platform support in mind.

2014, 2015, and 2016 saw the birth of Apple's Metal, Microsoft's DirectX 12 for Windows and Xbox, and Khronos's Vulkan, respectively. Together they cover iOS, macOS, Windows, Android, and other platforms.

In 2017, the W3C GPU for the Web Community Group was formed to design WebGPU, the next-generation graphics interface for the Web platform. All major browser engine vendors have announced that they will support WebGPU in their browsers.

In 2018, OpenGL ES was deprecated in iOS 12, and OpenGL was deprecated in macOS Mojave.

In terms of historical development, the GL family has completed its historical mission and needs a new generation of APIs to replace it.

The first feature of next-generation graphics APIs is the Command Buffer design.

Traditional OpenGL submits commands to the GPU immediately: whether you switch render state, switch pipeline state, or issue a draw call, the command is submitted directly and the GPU executes it right away. Modern graphics APIs first record commands into a Command Buffer and then submit the recorded Command Buffers to the GPU together, so submission becomes deferred. This model makes the whole rendering path easier to reason about and also supports multi-threaded parallel recording. That matters because more and more content is being rendered, especially in the metaverse, which requires very large 3D scenes with many objects and characters and therefore many Command Buffers. Parallel recording across threads is a great advantage on the CPU side.
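The record-then-submit model can be illustrated with a small toy in TypeScript. This is only a sketch: `CommandEncoder`, `Queue`, and the command shapes are hypothetical names made up for illustration, not the WebGPU or Cocos GFX API. It shows that recording executes nothing, and that several independently recorded buffers are handed over in one submit.

```typescript
// Toy model of deferred command recording. All names here are
// illustrative assumptions, not part of any real graphics API.
type Command =
  | { kind: "setPipeline"; pipeline: string }
  | { kind: "draw"; vertexCount: number };

class CommandEncoder {
  private commands: Command[] = [];

  setPipeline(pipeline: string): this {
    this.commands.push({ kind: "setPipeline", pipeline });
    return this;
  }

  draw(vertexCount: number): this {
    this.commands.push({ kind: "draw", vertexCount });
    return this;
  }

  // Freeze the recorded commands into an immutable command buffer.
  finish(): readonly Command[] {
    const buffer = Object.freeze([...this.commands]);
    this.commands = [];
    return buffer;
  }
}

class Queue {
  readonly executed: Command[] = [];

  // Nothing reaches the "GPU" until submit is called.
  submit(buffers: ReadonlyArray<readonly Command[]>): void {
    for (const buffer of buffers) {
      this.executed.push(...buffer);
    }
  }
}

// Each encoder could be filled by a different worker thread; here they
// are recorded one after another for simplicity.
const opaquePass = new CommandEncoder().setPipeline("opaque").draw(36).finish();
const transparentPass = new CommandEncoder().setPipeline("transparent").draw(6).finish();

const gpuQueue = new Queue();
const executedBeforeSubmit = gpuQueue.executed.length; // recording alone runs nothing
gpuQueue.submit([opaquePass, transparentPass]);
```

Because each encoder owns its own command list, encoders do not contend with one another while recording, which is what makes parallel recording practical.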

The second is the Pipeline State Object (PSO), which abstracts the entire rendering pipeline state of the GPU. OpenGL was a state machine: any state you changed took effect immediately and individually. In next-generation graphics APIs, the PSO bundles that state into a single object, so switching PSOs switches the whole GPU rendering pipeline state at once in a controlled way.
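As a rough sketch of the idea, the TypeScript below models a PSO as an immutable object built once from a descriptor and reused whenever the same descriptor comes up again. The descriptor fields and the `PipelineCache` class are assumptions chosen for illustration; real APIs carry far more state.

```typescript
// Illustrative types only; the field names are assumptions, not a real API.
interface PipelineDescriptor {
  shader: string;
  blend: "opaque" | "alpha";
  depthTest: boolean;
  cullMode: "none" | "back";
}

interface PipelineState extends Readonly<PipelineDescriptor> {
  readonly id: number;
}

class PipelineCache {
  private readonly cache = new Map<string, PipelineState>();

  // Identical descriptors map to the same immutable pipeline state object.
  get(desc: PipelineDescriptor): PipelineState {
    const key = [desc.shader, desc.blend, desc.depthTest, desc.cullMode].join("|");
    let pso = this.cache.get(key);
    if (pso === undefined) {
      pso = Object.freeze({ ...desc, id: this.cache.size });
      this.cache.set(key, pso);
    }
    return pso;
  }

  get size(): number {
    return this.cache.size;
  }
}

const psoCache = new PipelineCache();
const opaqueA = psoCache.get({ shader: "standard", blend: "opaque", depthTest: true, cullMode: "back" });
const opaqueB = psoCache.get({ shader: "standard", blend: "opaque", depthTest: true, cullMode: "back" });
const glass = psoCache.get({ shader: "standard", blend: "alpha", depthTest: true, cullMode: "back" });
```

Here `opaqueA` and `opaqueB` are the same object, so changing state at draw time reduces to swapping one reference instead of issuing many individual state calls.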

The third is Resource Binding, which is managed explicitly by the upper layer. Two objects, the Pipeline Layout and the Descriptor Set Layout, describe all the camera, material, and object resources in a scene. With that information, PSOs are built one by one and handed to the GPU, which switches between them so that one state flows into the next. This makes the entire rendering pipeline more controllable and hands the degrees of freedom to upper-level developers. It can be very efficient because you can manage the transitions between states yourself.
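To make the two layout objects more concrete, here is a minimal TypeScript model. It assumes, for illustration only, that resources are grouped into one set per update frequency (camera, material, object); the type names and the `matchesLayout` helper are hypothetical and are not the Cocos GFX API.

```typescript
// Illustrative model only; these names are assumptions, not a real API.
type ResourceKind = "uniformBuffer" | "texture" | "sampler";

interface BindingSlot {
  binding: number;
  kind: ResourceKind;
}

// A descriptor set layout lists the slots of one set; a pipeline layout
// is the ordered list of set layouts that a pipeline expects.
type DescriptorSetLayout = readonly BindingSlot[];
type PipelineLayout = readonly DescriptorSetLayout[];

interface BoundResource extends BindingSlot {
  resource: string;
}

// A descriptor set is compatible when it fills every slot with the right kind.
function matchesLayout(layout: DescriptorSetLayout, set: readonly BoundResource[]): boolean {
  return (
    layout.length === set.length &&
    layout.every((slot) => set.some((r) => r.binding === slot.binding && r.kind === slot.kind))
  );
}

// Assumed grouping: set 0 changes per camera, set 1 per material, set 2 per object.
const perCamera: DescriptorSetLayout = [{ binding: 0, kind: "uniformBuffer" }];
const perMaterial: DescriptorSetLayout = [
  { binding: 0, kind: "uniformBuffer" },
  { binding: 1, kind: "texture" },
  { binding: 2, kind: "sampler" },
];
const perObject: DescriptorSetLayout = [{ binding: 0, kind: "uniformBuffer" }];
const pipelineLayout: PipelineLayout = [perCamera, perMaterial, perObject];

const brickMaterial: BoundResource[] = [
  { binding: 0, kind: "uniformBuffer", resource: "brickParams" },
  { binding: 1, kind: "texture", resource: "brickAlbedo" },
  { binding: 2, kind: "sampler", resource: "linearRepeat" },
];
const missingSampler = brickMaterial.slice(0, 2);

const brickIsCompatible = matchesLayout(perMaterial, brickMaterial);
const incompleteIsCompatible = matchesLayout(perMaterial, missingSampler);
```

Declaring the layout up front is what lets a mismatch, such as the set with the missing sampler, be caught before drawing rather than guessed at by the driver.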

The fourth is the Thin Driver: a move from implicit prediction to explicit declaration. The driver layer of next-generation graphics APIs is very thin. With OpenGL, the driver had to predict what operations the GPU would need; now the application must declare them itself, including explicit synchronization, barrier management, and memory management.

Things like resource binding and binding layouts need to be managed manually, which gives graphics programmers more control and more responsibility. We believe this makes the whole pipeline better suited to a wide range of application scenarios. When the driver is thick, it has to guess at general requirements; when developers have more control, they can optimize specifically for their own graphics application.
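The explicit style described above can be sketched with a toy resource-state tracker in TypeScript. Everything here (`TrackedTexture`, `barrier`, the three states) is a hypothetical simplification; real APIs such as Vulkan have far richer barrier semantics. The point is only that the application states each transition, and a forgotten one is an error rather than something the driver silently patches up.

```typescript
// Toy model of explicit resource transitions; names and states are
// illustrative assumptions, not a real graphics API.
type ResourceState = "undefined" | "renderTarget" | "shaderRead";

class TrackedTexture {
  state: ResourceState = "undefined";
  constructor(readonly label: string) {}
}

// The application, not the driver, declares the transition.
function barrier(tex: TrackedTexture, to: ResourceState): void {
  tex.state = to;
}

function renderTo(tex: TrackedTexture): void {
  if (tex.state !== "renderTarget") {
    throw new Error(`${tex.label} is not in renderTarget state`);
  }
}

function sampleFrom(tex: TrackedTexture): void {
  if (tex.state !== "shaderRead") {
    throw new Error(`${tex.label} is not in shaderRead state`);
  }
}

const shadowMap = new TrackedTexture("shadowMap");
barrier(shadowMap, "renderTarget");
renderTo(shadowMap);

// Sampling without a transition is a hazard that the toy tracker reports.
let missingBarrierDetected = false;
try {
  sampleFrom(shadowMap);
} catch {
  missingBarrierDetected = true;
}

barrier(shadowMap, "shaderRead");
sampleFrom(shadowMap); // valid after the explicit transition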

WebGL is a mapping of OpenGL ES, whereas WebGPU is an abstraction that unifies the modern graphics interfaces. It sits directly on top of the current native graphics APIs rather than mirroring a single one, as WebGL mirrors OpenGL ES.

WebGPU runtimes wrap and connect directly to all these graphics backends; implementations include Dawn, gfx-rs, and WebKit. Google's Dawn implementation is a good reference for wrapping modern graphics backends.

Finally, WebGPU supports more GPU features. Compute shaders are supported natively, which is a good reason for us to welcome this future. Ray tracing is another feature of interest, although it is not part of the current WebGPU specification.

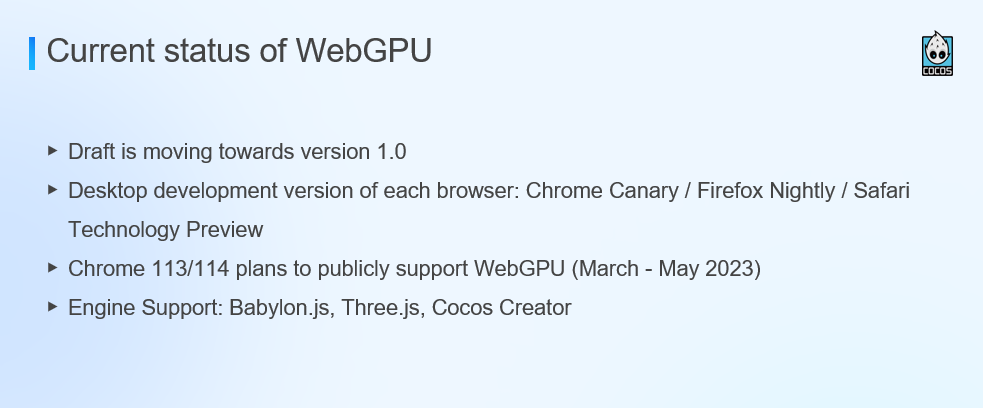

As for the current status of WebGPU, browser vendors are gradually adding support. Chrome in particular is expected to ship WebGPU between March and May 2023.

Currently, the mainstream engines that support it are Babylon.js, Three.js, and Cocos Creator.

Cocos embraces the next-generation graphics standard WebGPU
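While support is still rolling out, an application usually needs to detect WebGPU and fall back to WebGL. The sketch below relies on one fact about the standard API, that the entry point is exposed as `navigator.gpu`; the helper names and the two-way fallback are assumptions for illustration. The functions take a navigator-like object so they can also run outside a browser.

```typescript
// `gpu` is the WebGPU entry point on the browser's navigator object;
// it is absent where WebGPU is not available.
interface NavigatorLike {
  gpu?: unknown;
}

type TargetBackend = "webgpu" | "webgl";

function hasWebGPU(nav: NavigatorLike): boolean {
  return nav.gpu !== undefined && nav.gpu !== null;
}

// Hypothetical helper: prefer WebGPU, otherwise fall back to WebGL.
function pickBackend(nav: NavigatorLike): TargetBackend {
  return hasWebGPU(nav) ? "webgpu" : "webgl";
}

// Stand-ins for real browser objects, used here only for demonstration.
const browserWithWebGPU: NavigatorLike = { gpu: {} };
const browserWithoutWebGPU: NavigatorLike = {};

const modernChoice = pickBackend(browserWithWebGPU);
const legacyChoice = pickBackend(browserWithoutWebGPU);
```

In a browser, the argument would be the global `navigator`; a type cast may be needed if WebGPU type definitions are not installed.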

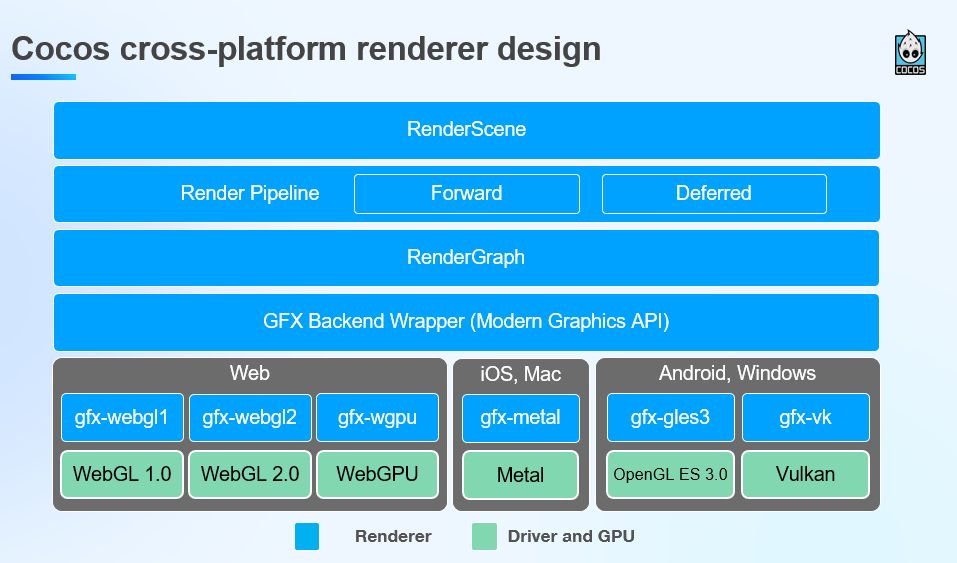

Cocos's cross-platform renderer design is embodied in the GFX layer. At the bottom, GFX unifies the adaptations to different graphics APIs behind one set of interfaces. This interface is quite close to modern graphics APIs and supports concepts such as Command Buffer, PSO, Pipeline State Layout, Pipeline Layout, and Descriptor Set Layout, so adapting Cocos to WebGPU is fast and straightforward.

One level up, we have our own RenderGraph design. RenderGraph further abstracts the information in the upper-level render scene and then hands it to the GFX layer. We have done a lot of optimization work here: collecting the materials, scenes, and PSOs used by models, organizing them with Descriptor Set Layouts, combining them with Pipeline Layouts, deriving the PSOs, and baking them in advance, so that rendering consists as far as possible only of switching between PSOs. This is a very efficient optimization.

At the same time, we turned the entire rendering pipeline into a RenderGraph-based pipeline. We first deduce the rendering pipeline from the graph and then execute it to generate all the Command Buffers. We also implemented both the forward pipeline and the deferred pipeline on RenderGraph, with excellent results.
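One way to picture "only switching between PSOs" is to sort draw items by their PSO so that each PSO is bound once. The TypeScript below is a generic illustration of that idea, not the Cocos implementation; `DrawItem` and both helper functions are hypothetical, and a real renderer must also respect ordering constraints such as transparency.

```typescript
// Hypothetical draw item: a mesh plus the id of the prebuilt PSO it uses.
interface DrawItem {
  mesh: string;
  psoId: number;
}

// Count how often the bound PSO changes while walking the draw list.
function countPipelineSwitches(draws: readonly DrawItem[]): number {
  let switches = 0;
  let current: number | undefined;
  for (const draw of draws) {
    if (draw.psoId !== current) {
      switches++;
      current = draw.psoId;
    }
  }
  return switches;
}

// Group draws that share a PSO; the input list is left untouched.
function sortByPipeline(draws: readonly DrawItem[]): DrawItem[] {
  return [...draws].sort((a, b) => a.psoId - b.psoId);
}

const sceneDraws: DrawItem[] = [
  { mesh: "tree", psoId: 1 },
  { mesh: "rock", psoId: 0 },
  { mesh: "bush", psoId: 1 },
  { mesh: "cliff", psoId: 0 },
  { mesh: "water", psoId: 2 },
];

const switchesUnsorted = countPipelineSwitches(sceneDraws); // 5
const switchesSorted = countPipelineSwitches(sortByPipeline(sceneDraws)); // 3
```

With three distinct PSOs in the scene, three switches is the minimum, which the sorted list reaches.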
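The "deduce, then execute" step can be sketched as a small graph compilation pass. This TypeScript toy assumes that passes are declared in a valid execution order and that each resource has a single writer; the pass names borrow effects mentioned earlier in the talk, but the structure is an illustration, not the Cocos RenderGraph.

```typescript
// Hypothetical pass description: which resources a pass reads and writes.
interface RenderPass {
  name: string;
  reads: string[];
  writes: string[];
}

// Walk backwards from the final output, keep only passes whose results
// are needed, and return their names in execution order.
function compileGraph(passes: readonly RenderPass[], output: string): string[] {
  const needed = new Set<string>([output]);
  const kept: string[] = [];
  for (let i = passes.length - 1; i >= 0; i--) {
    const pass = passes[i];
    if (pass === undefined) continue;
    if (pass.writes.some((resource) => needed.has(resource))) {
      pass.reads.forEach((resource) => needed.add(resource));
      kept.unshift(pass.name);
    }
  }
  return kept;
}

const deferredPasses: RenderPass[] = [
  { name: "gbuffer", reads: [], writes: ["gbuffer"] },
  { name: "ssao", reads: ["gbuffer"], writes: ["ao"] },
  { name: "debugOverlay", reads: ["gbuffer"], writes: ["debug"] },
  { name: "lighting", reads: ["gbuffer", "ao"], writes: ["hdr"] },
  { name: "bloom", reads: ["hdr"], writes: ["bloomTex"] },
  { name: "tonemap", reads: ["hdr", "bloomTex"], writes: ["backbuffer"] },
];

// "debugOverlay" contributes nothing to the backbuffer, so it is culled.
const executionOrder = compileGraph(deferredPasses, "backbuffer");
```

Command buffers would then be recorded only for the passes in `executionOrder`, which is why deducing the pipeline first pays off.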

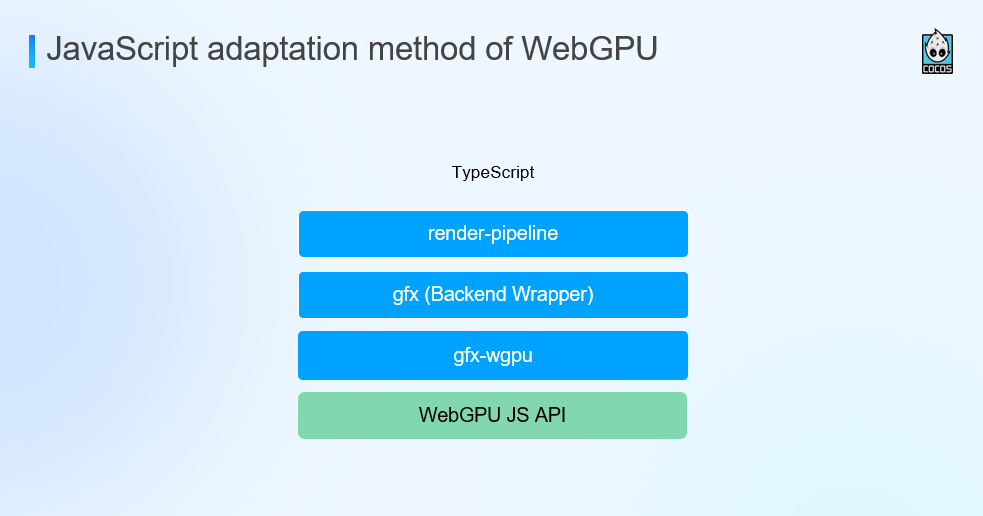

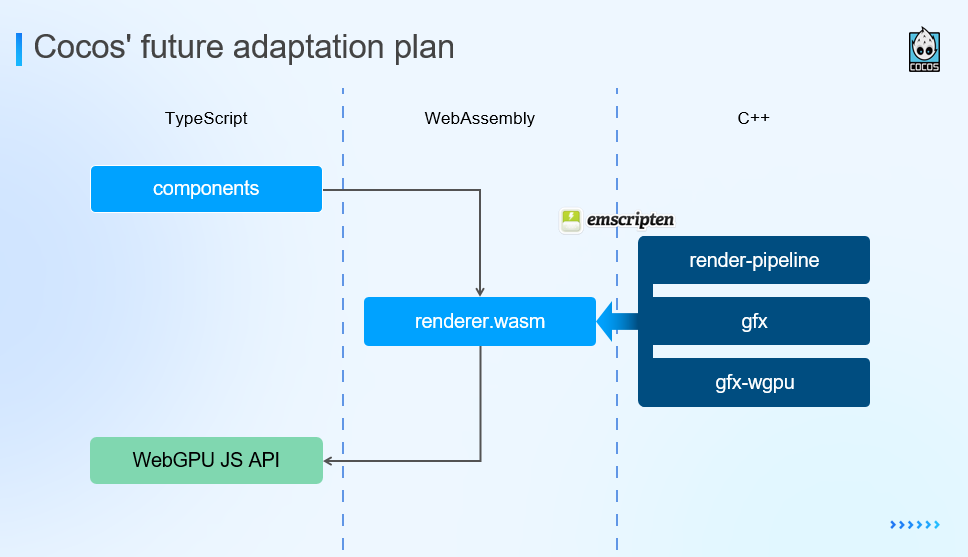

On WebGPU, our first wrapper was written in JavaScript. We implemented the whole of gfx-wgpu in TypeScript on top of the WebGPU JavaScript API, to be used directly by the TypeScript render-pipeline. It was completed in March last year but, for practical reasons, was not taken further.

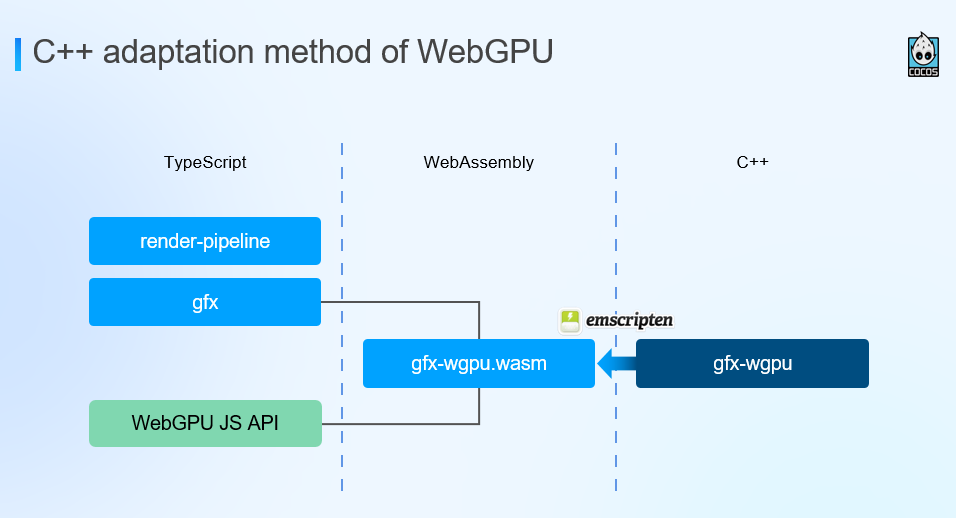

We later moved to a C++ approach: we implemented our own gfx-wgpu in C++, compiled it to WebAssembly with Emscripten, and now execute all GFX commands through WebAssembly in the browser.

In the future, we will go further and compile render-pipeline and GFX together into a single renderer WebAssembly module. All our renderers, especially the render graph implementation, are already in C++, so this is the more natural choice. We took the C++ route because more of our future-oriented infrastructure is implemented in C++.

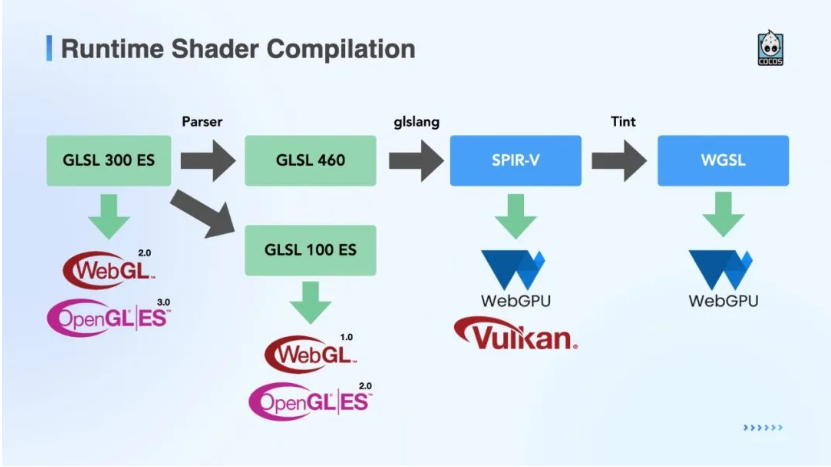

Finally, shaders also need attention. The picture above shows the shader adaptation pipeline of our engine, but every engine and every application developer faces the same problem. To target WebGPU, you can presently use only SPIR-V or WGSL, and SPIR-V is supported only temporarily in Chrome and may be removed soon.

So our current compilation process is as follows. Users write GLSL, which is compiled into GLSL version 460 and version 100. Only version 100 can run on WebGL 1.0 and OpenGL ES 2.0. We go on to compile version 460 into SPIR-V, which runs on WebGPU for the time being and is the primary format we use on Vulkan.

Next comes Tint, the shader compiler that Chrome implements in Dawn. We use Tint to compile SPIR-V into WGSL, which then runs on WebGPU. As a result, the entire shader compilation process is long and complicated.
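The chain described above can be summarized as a lookup from target backend to the sequence of shader formats. The TypeScript below only encodes the stages named in the talk; the function and type names are hypothetical, and no real compiler is invoked.

```typescript
// Backends and formats as named in the text; identifiers are illustrative.
type ShaderTarget = "WebGL 1.0" | "OpenGL ES 2.0" | "Vulkan" | "WebGPU";
type ShaderFormat = "GLSL source" | "GLSL 100" | "GLSL 460" | "SPIR-V" | "WGSL";

// Return the sequence of formats a shader passes through for a given target.
function compileChain(target: ShaderTarget): ShaderFormat[] {
  switch (target) {
    case "WebGL 1.0":
    case "OpenGL ES 2.0":
      return ["GLSL source", "GLSL 100"];
    case "Vulkan":
      return ["GLSL source", "GLSL 460", "SPIR-V"];
    case "WebGPU":
      // The final SPIR-V to WGSL step is the one performed by Tint.
      return ["GLSL source", "GLSL 460", "SPIR-V", "WGSL"];
  }
}

const webgpuChain = compileChain("WebGPU").join(" -> ");
const webglChain = compileChain("WebGL 1.0").join(" -> ");
```

Laid out this way, it is easy to see that the WebGPU path is the longest, with three conversions between the user's GLSL and the WGSL that finally runs.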

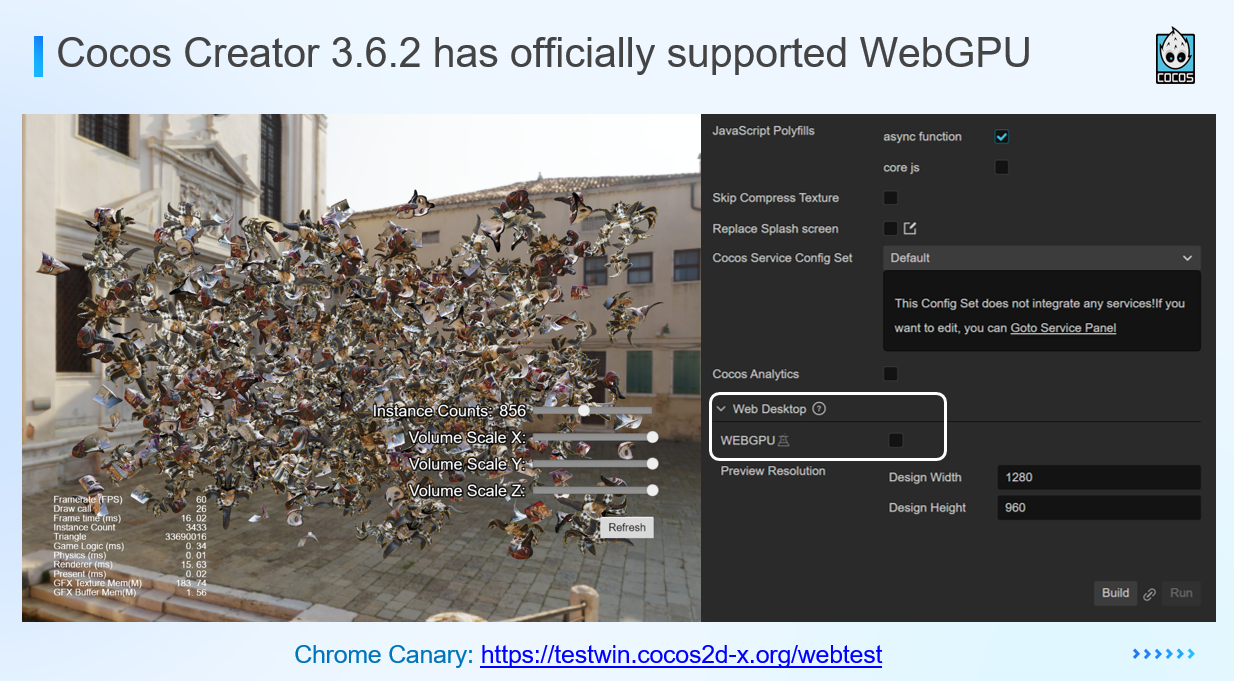

Cocos Creator 3.6.2 officially supports WebGPU. To enable it, check the experimental WebGPU option when publishing to Web-Desktop.

Since our GFX layer encapsulates all backend differences, they are entirely invisible to developers. Developers only need to build their games and publish them to the new WebGPU backend without any additional work. The same applies to Metal and Vulkan, so you can enjoy the advantages of modern graphics APIs at no extra cost.

There are more than 30 million triangles in this demo scene. The models are very detailed and the scene is very complex, yet it runs efficiently at up to 60 frames per second.

Finally, I'll share the pitfalls and experiences we encountered in WebGPU adaptation. Currently, WebGPU and WGSL are still in the draft stage, and the API is not yet completely stable, so it is necessary to keep an eye on the progress of the standard.

WebGPU has its own shader language, so you need to consider how to convert GLSL and HLSL to WGSL.

Chromium currently has the most complete implementation and is the best choice for debugging and development. The tools and implementations in Dawn are also worth a look.

Adapting to WebGPU relies heavily on the validation layer for debugging and exposing problems, so try not to ignore warnings.

At this stage, we recommend adapting to the WebGPU API directly in JavaScript. We chose the WASM route for reasons specific to our own needs, chiefly that we have to maintain a unified underlying implementation.

Emscripten also brings some trouble: its support for the WebGPU interface may lag behind, so you have to find and work around problems as you go.

WGSL does not currently support combined image samplers, which was a big problem for us. In the traditional pipeline, the texture and sampler are declared together, so you only need a single sampler declaration. In next-generation graphics APIs, it is recommended to declare the sampler and the texture separately.
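To show what the split looks like, the TypeScript helper below rewrites one GLSL combined-sampler declaration into the two separate WGSL declarations. It is a toy string transform under stated assumptions: it handles only `sampler2D` with an explicit `layout(set, binding)`, the caller supplies a free binding index for the sampler, and the `Sampler` name suffix is an arbitrary convention. It is not how Tint or the Cocos toolchain performs the conversion.

```typescript
// Matches declarations such as:
//   layout(set = 1, binding = 1) uniform sampler2D albedoMap;
const COMBINED_SAMPLER_PATTERN =
  /layout\s*\(\s*set\s*=\s*(\d+)\s*,\s*binding\s*=\s*(\d+)\s*\)\s*uniform\s+sampler2D\s+(\w+)\s*;/;

// Hypothetical helper: returns the WGSL texture and sampler declarations,
// or null when the input is not a supported combined-sampler declaration.
function splitCombinedSampler(glslDecl: string, samplerBinding: number): string[] | null {
  const match = COMBINED_SAMPLER_PATTERN.exec(glslDecl);
  if (match === null) {
    return null;
  }
  const [, group, textureBinding, identifier] = match;
  return [
    `@group(${group}) @binding(${textureBinding}) var ${identifier}: texture_2d<f32>;`,
    `@group(${group}) @binding(${samplerBinding}) var ${identifier}Sampler: sampler;`,
  ];
}

const wgslDecls = splitCombinedSampler(
  "layout(set = 1, binding = 1) uniform sampler2D albedoMap;",
  2,
);
```

In the shader body, a GLSL call like `texture(albedoMap, uv)` would correspondingly become `textureSample(albedoMap, albedoMapSampler, uv)` in WGSL.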

Finally, I'll share the architectural goals of Cocos.

First, we want to raise the systemic performance ceiling rather than optimize single points. Once that ceiling is raised, developers have more room to work with.

The second point is to make cross-platform development as simple and seamless as possible. Developers can publish to WebGPU by ticking a single checkbox, which is something we have done exceptionally well.

The third point is an infrastructure designed for the future. This is why we support WebGPU. As early as the end of 2016, we favored more modern APIs such as Vulkan and Metal over GL-based ones, so our design is built on Vulkan- and Metal-style infrastructure.

The fourth point is to bring a powerful next-generation graphical interface to Web 3D content developers.

The last is to unify the infrastructure and reduce engine maintenance costs. This is why we chose the WebAssembly solution to wrap WebGPU.

Thank you all for reading.